I’ve super enjoyed my last 2 years full-time at Code.org, and I consider it a life highlight and a great privilege. As a child growing up in a rural village in Chile in the 1970s, I had the *incredible* privilege of being exposed to computer science education at a formative age. I learned BASIC programming on an Apple II computer with the help of my teacher at the local rural public school in Zapallar. This was in the era of the Pinochet military dictatorship, in a small town accessible only by dirt roads (which closed during rain washouts), where electricity was intermittent and general infrastructure was poor. My papa had brought an Apple II to town from the USA and was using it at his business for basic accounting in VisiCalc. My schoolteacher learned the computer and taught me what he had learned. My privilege was 3x: access to a computer, access to a teacher, and the time to learn the foundational skills of computational thinking that are central to computer science and coding. It was among the most important educational experiences of my life, and it formed a platform for how I think, feel, and have navigated the world, professionally and personally.

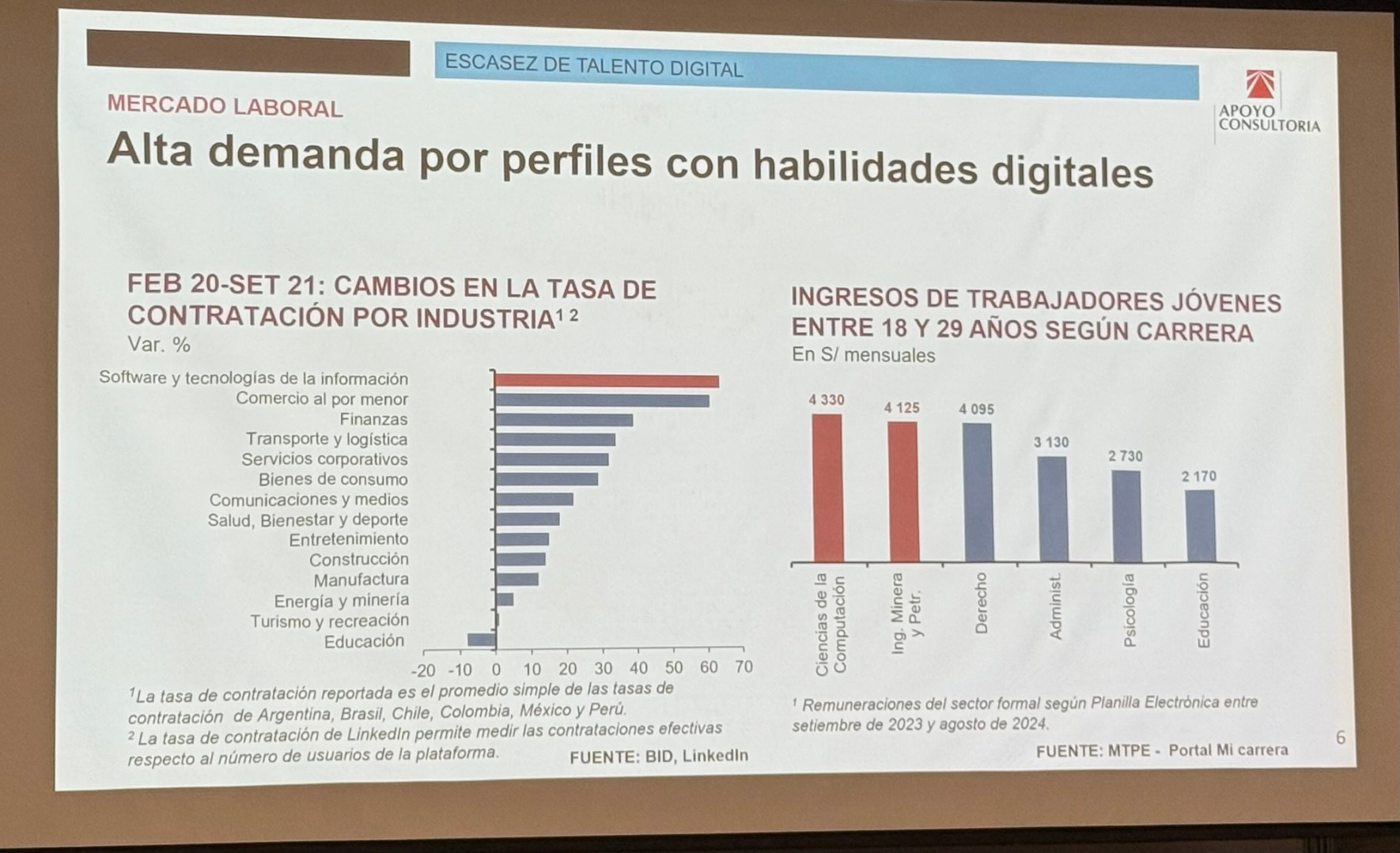

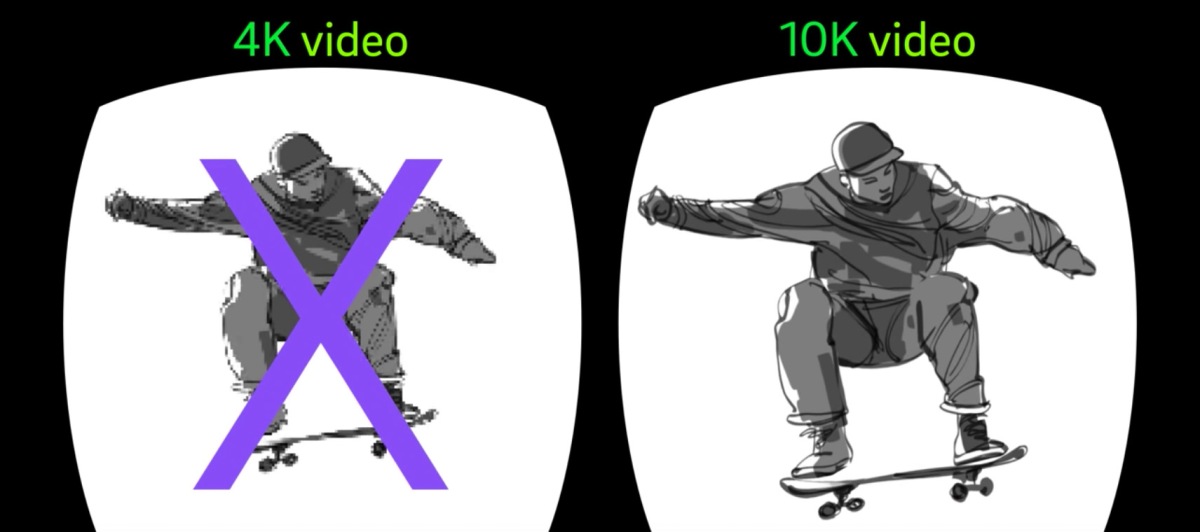

When I started talking to Code.org about joining in a leadership role, I was drawn to the “global” aspect of the opportunity: helping reach children in countries facing challenges I could relate to from my own experience in Chile. Mexico, Peru, Brazil, Colombia, and Argentina, countries I know well from personal travel and from prior business experience in my software career, have *very* different classroom contexts than US K-12 schools. There are far fewer computers for individual student learning, and very often no internet connectivity (and sometimes no dependable electricity). But just as importantly, the connection between CS education and its impact on a student’s life is less obvious there.

CS is not about “getting a job as an engineer.” It is a *much* broader foundation for logical thinking that helps a student learn to gather information, decompose problems, process data, and create systems and processes (algorithms) to solve them. How does a CS education benefit a young girl in a rural village? I believe it will benefit her across all of her academic studies, in the same way that basic numeracy and literacy are foundational. And it will apply not only to her life as a user of ubiquitous 21st-century technology (smartphones!) but also to her professional life in any field: agriculture, manufacturing, transportation, hospitality, the small family business at the market, etc.

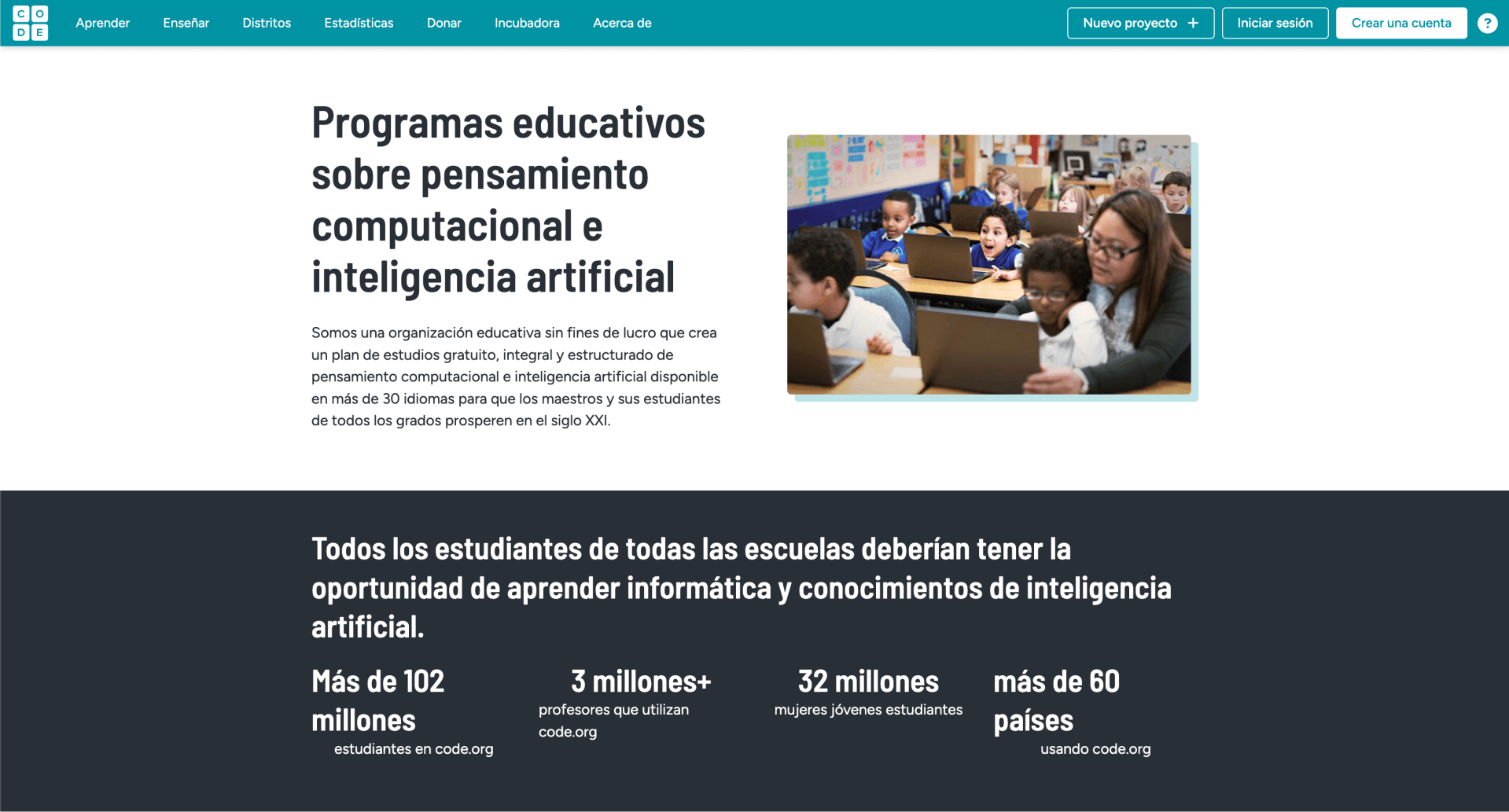

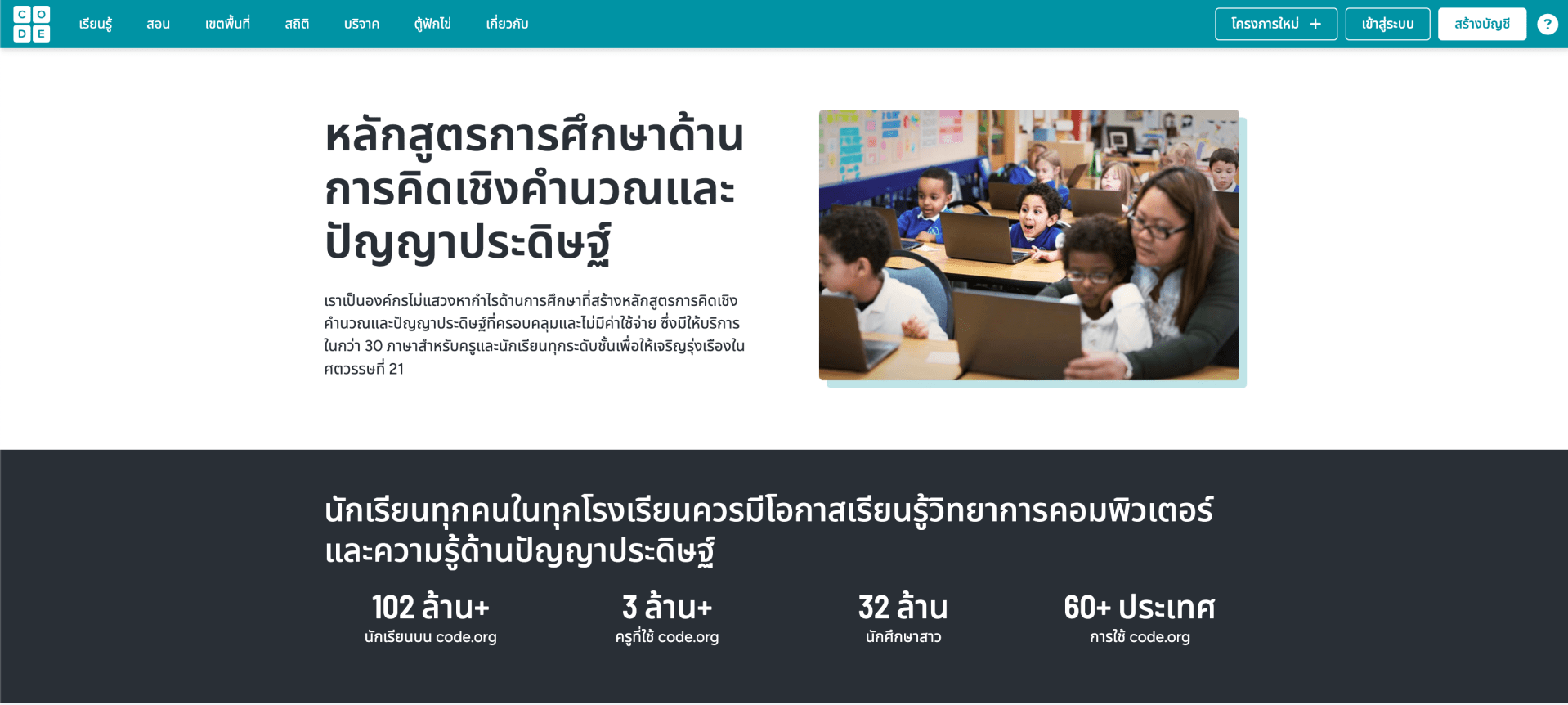

As the leader of Code.org’s global team, I got to work with an amazing group of multicultural colleagues from Chile, Korea, Peru, Slovakia, and India. Our small but mighty team carried the mission forward from the colleagues who came before us in the 2019-2023 era, who translated key elements of our curricula and lessons and reached well over 100,000,000 students around the world! Amaze-ballz stuff.

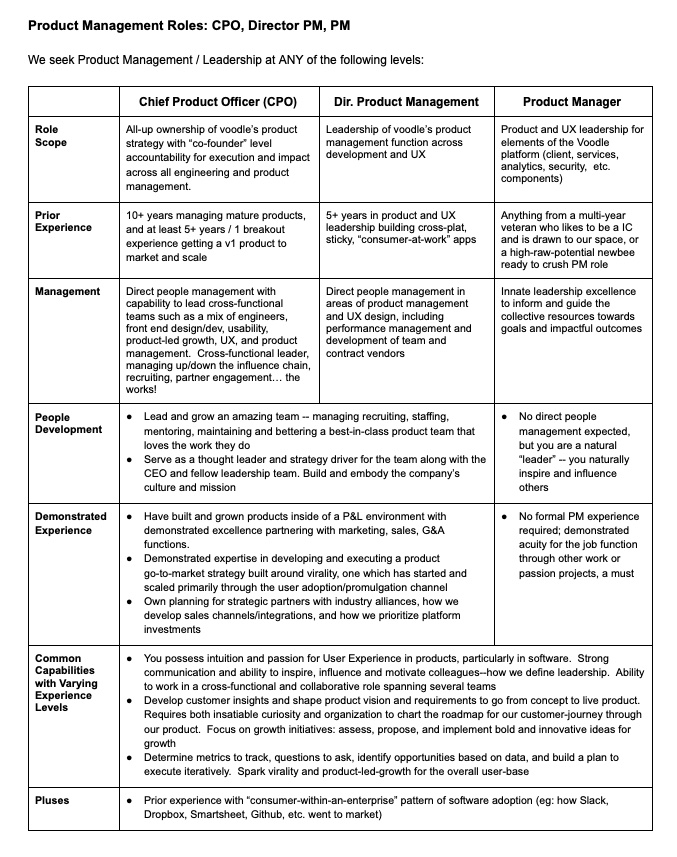

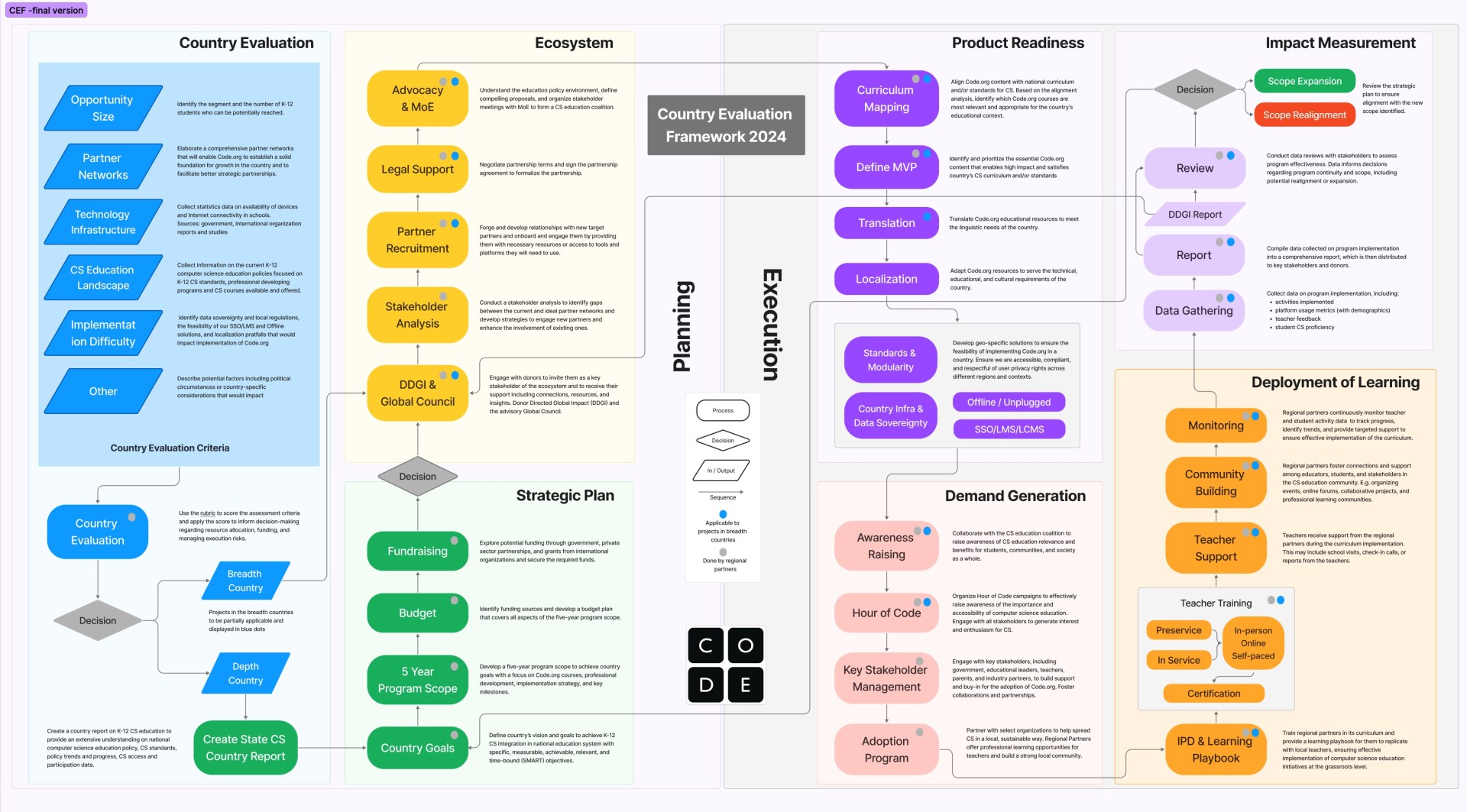

One of the first programmatic, value-add projects I brought from my experience as a product manager in commercial software was leading our team toward a consensus super-view of all the market-readiness elements we have found to be required, end to end, for a transformational implementation of a national CS education framework and student-impacting curricula. We called this our Country Evaluation and Execution Model (CEEM), and my colleague Doyeon came up with an awesome uber flow-chart that captured our institutional insights. Here’s the model:

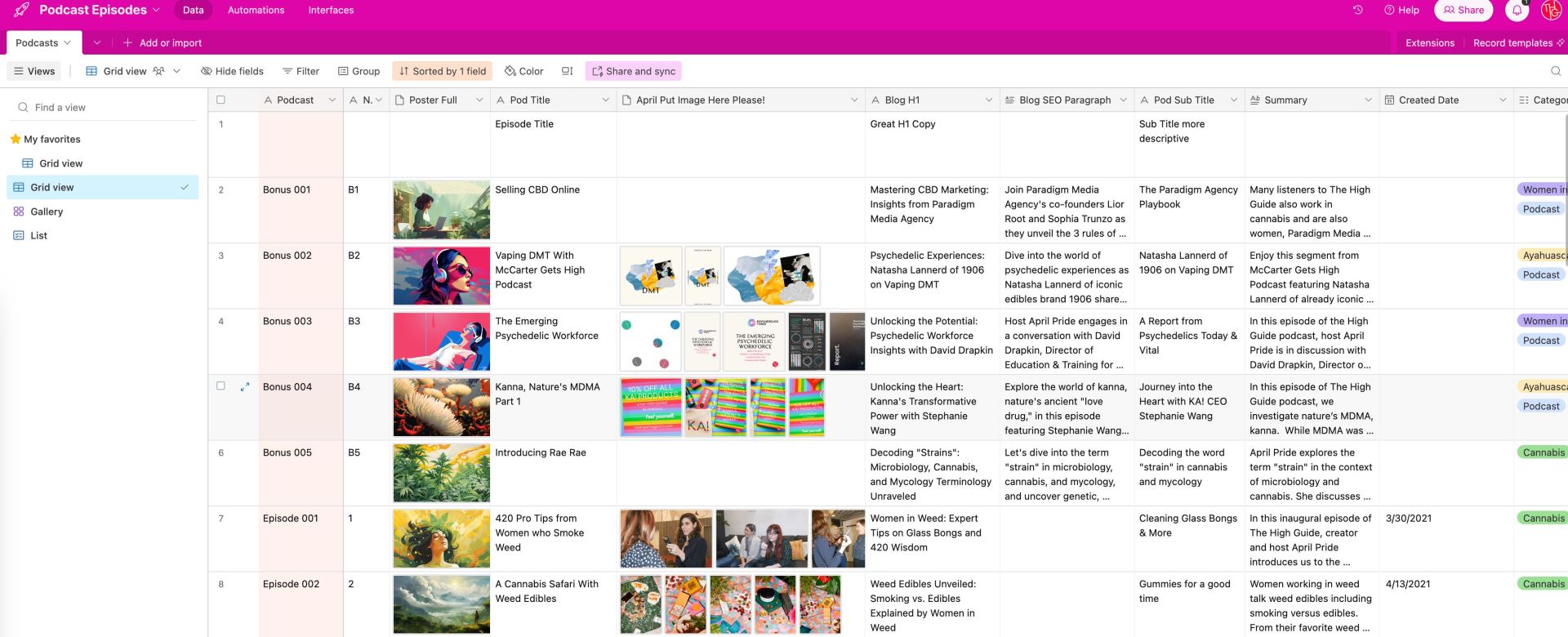

The fun part was then using this model to “audit” each of our currently active countries and see which pieces of these programs were in place: essentially, their “market readiness.” We asked our more than 100 regional partners to contribute local insights to these assessments and then generated country-by-country CEEM snapshots. The white spaces are gaps in the systems and programs we deem essential for successfully implementing CS education for students. No bueno!

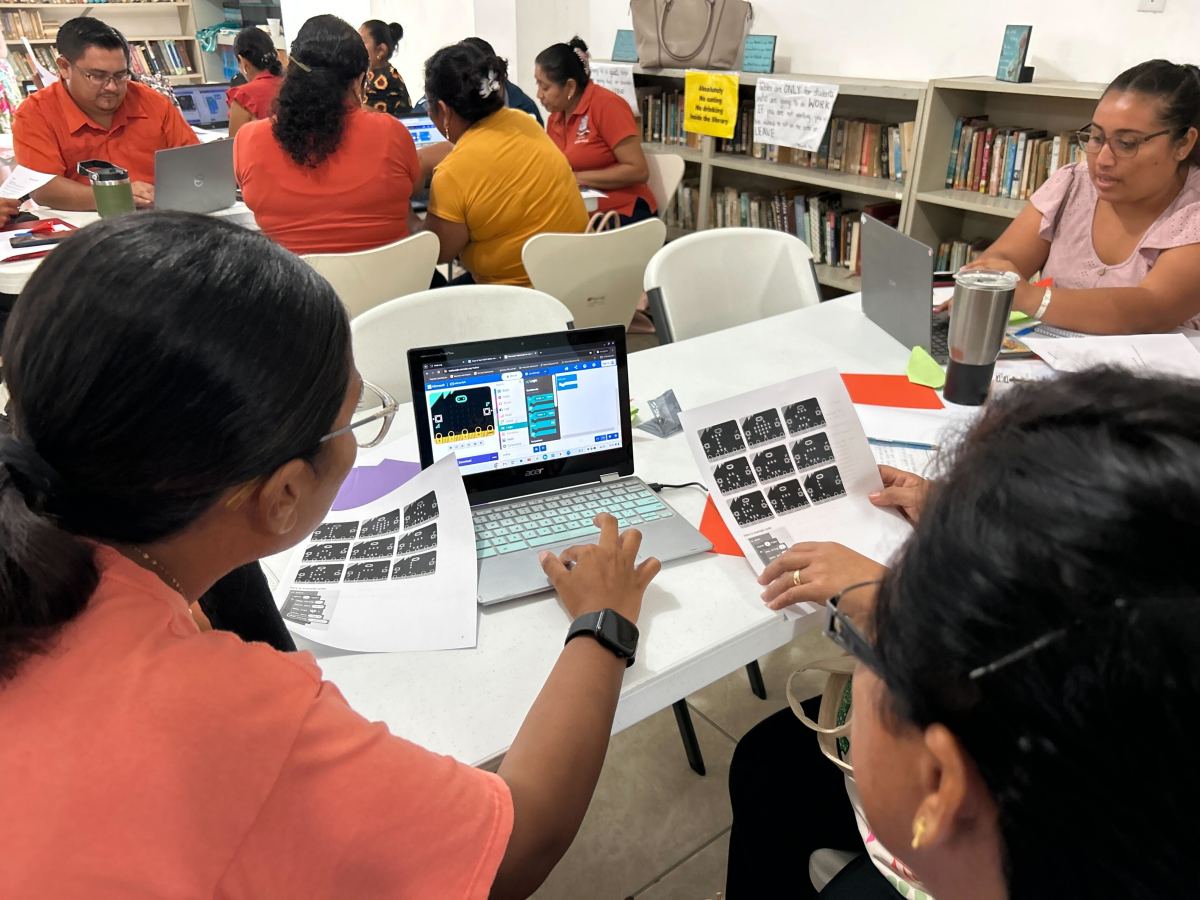

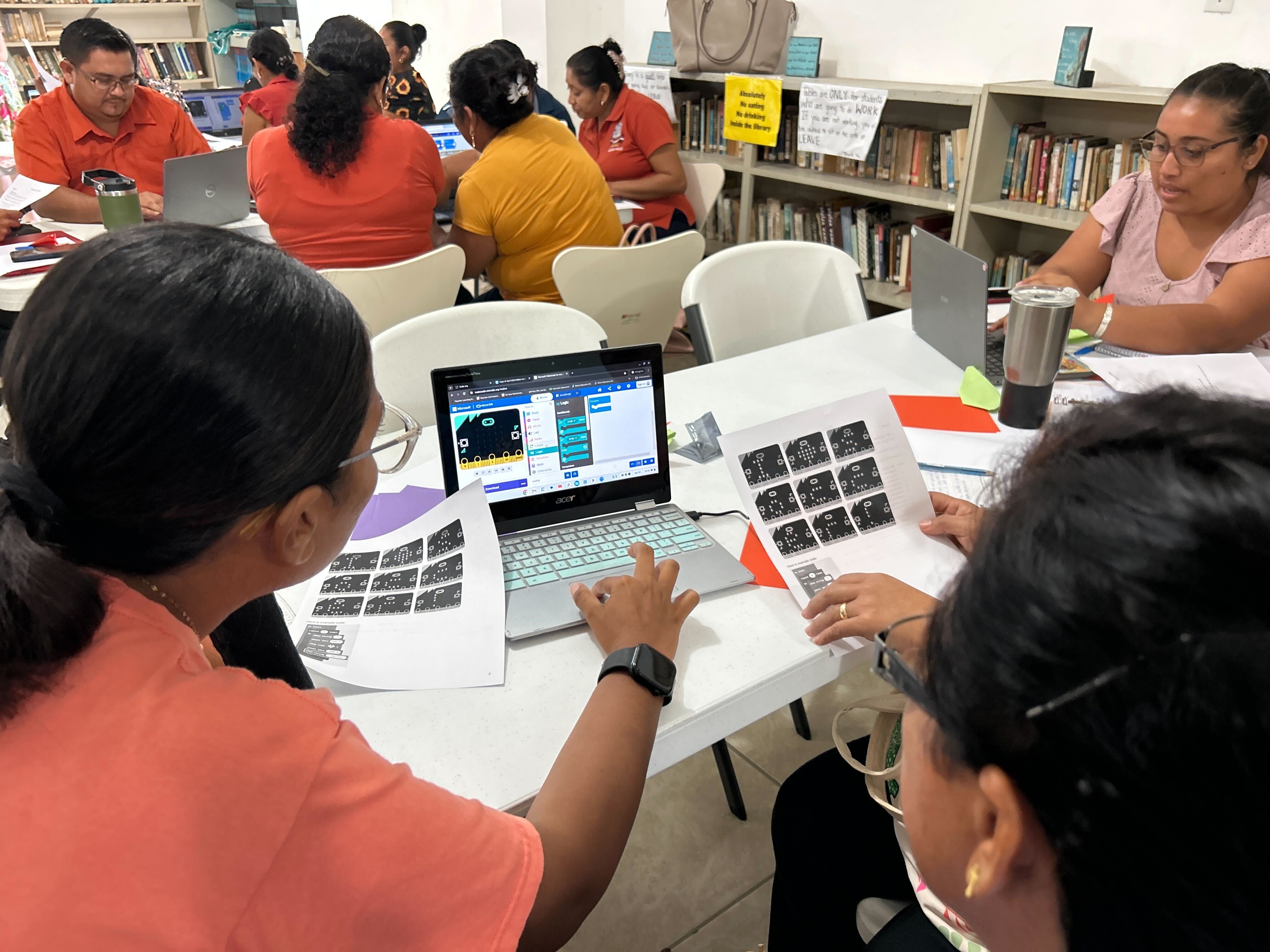

What emerged from this exercise was that our market readiness was spotty and incomplete in the vast majority of markets. Code.org had invested primarily in the US ecosystem (our home country, where we are based and where our donors and activities have focused 99% of our time and resources). Translated curricula alone are far from enough to drive change across ministry of education policy priorities, curriculum mapping to federal or state requirements (which drive how teachers and principals prioritize courses), teacher training and development to build capacity (crucial!), classroom infrastructure, and assessment and data gathering to measure outcomes. This complexity of programs is beyond the reach of most of our partners, who don’t have the same level of donor support in their home countries.

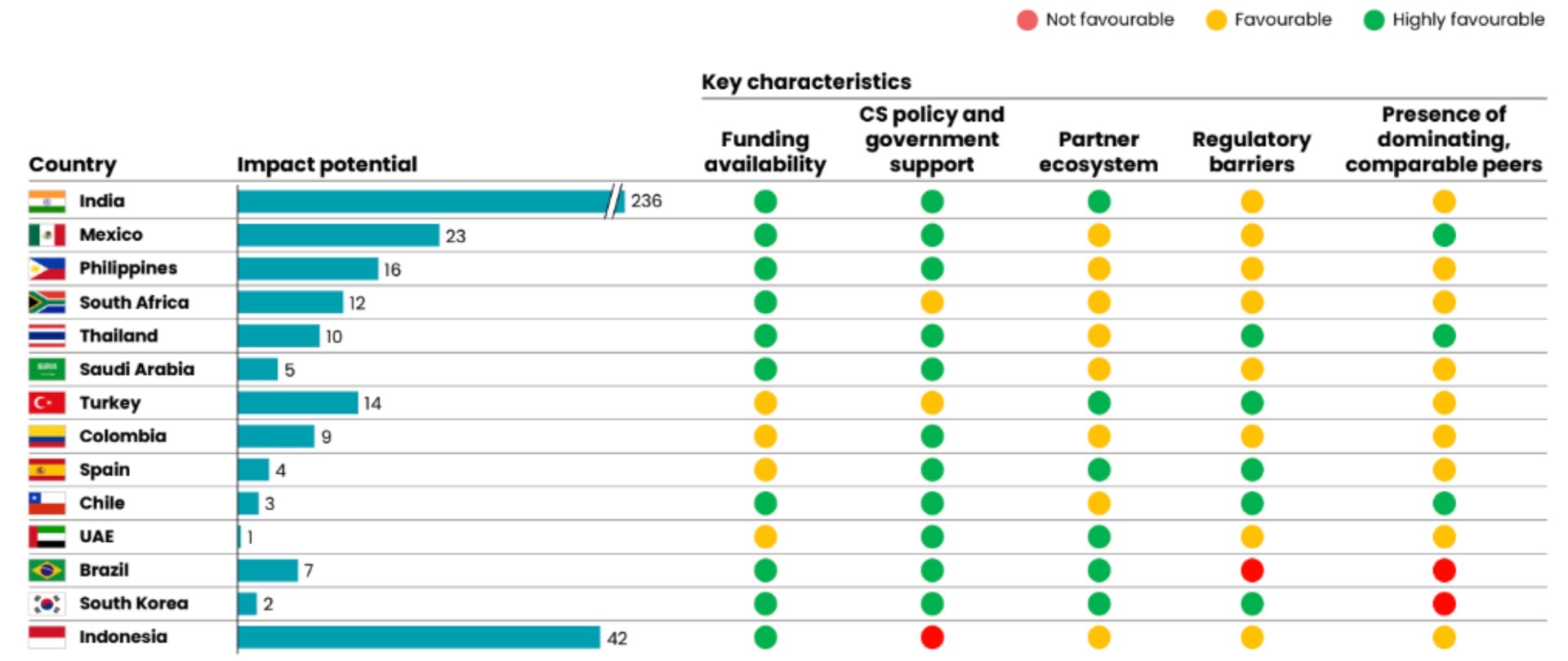

How could we maximize our reach and benefit as many students as possible in the next phase of our mission, particularly as AI technologies and the need for AI literacy rapidly transform all of education, and computational thinking and coding in particular? A wonderful pro-bono team from McKinsey helped us devise a country assessment rubric to prioritize our investments in the highest-impact, most-ready countries. The breadth and depth of their research was fantastic; I include just the simplest high-level slide to illustrate the themes of the rubric:

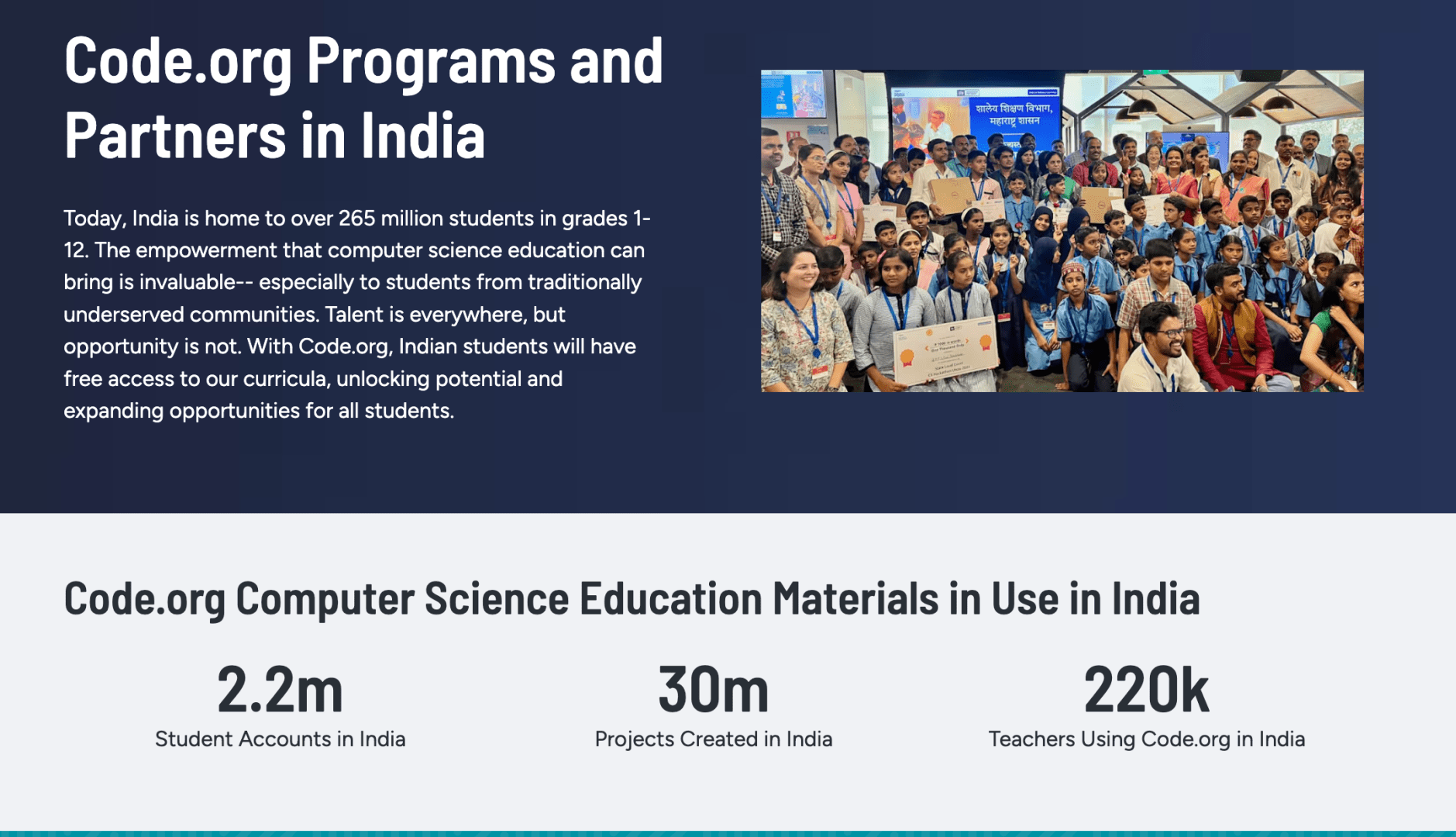

What emerged right at the tippy tippy top of the opportunities to help children in the world? India.

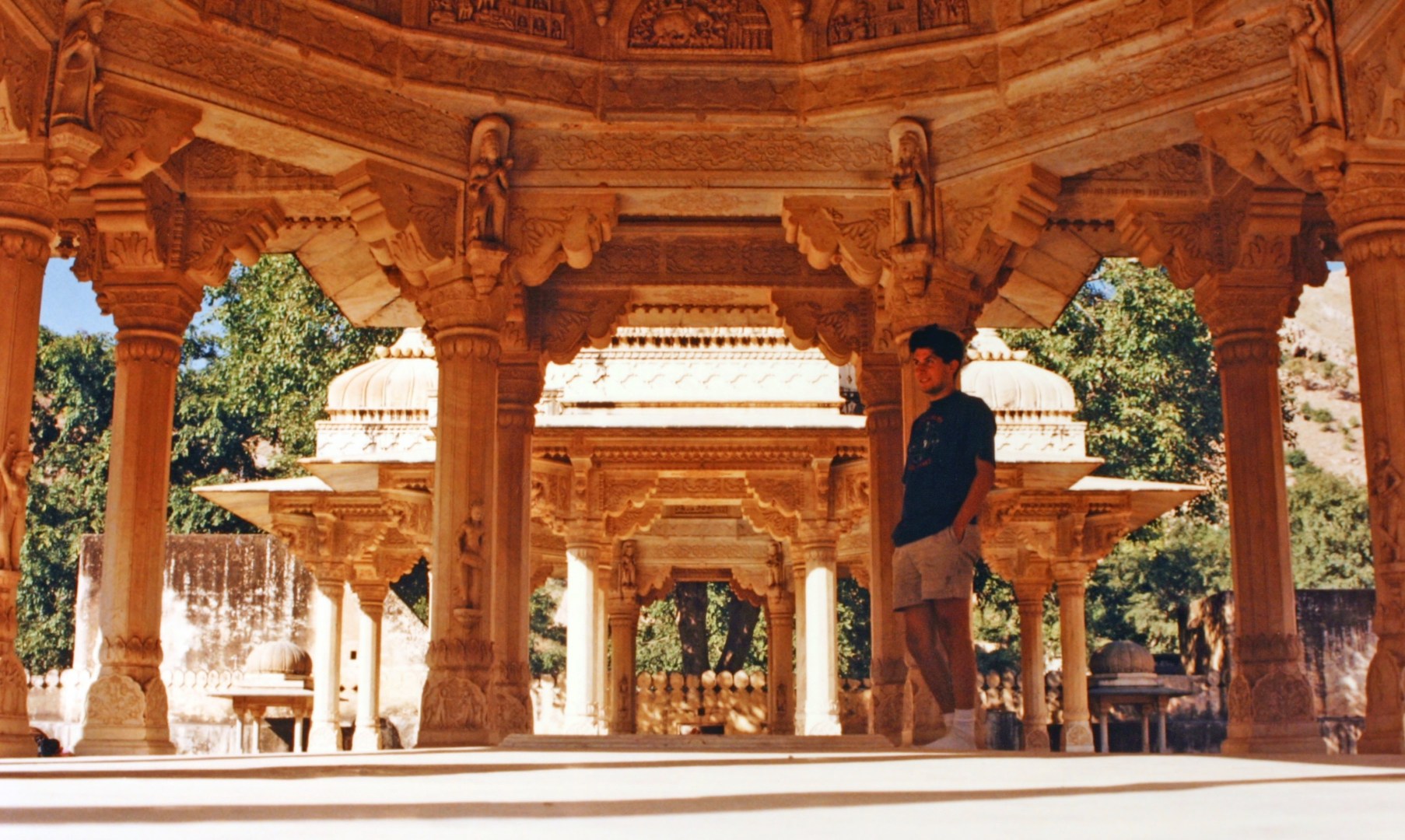

I first visited India in 1993, when I was a recent college grad with not a care in the world and the privilege of $3,000 I had saved up working as an assistant editor on music videos and commercials while in college in Los Angeles. Those savings propelled a friend and me across northern India on a rigid budget of $10 a day, which we stretched across Darjeeling, Varanasi, Agra, Delhi, Jaipur, Jodhpur, and Jaisalmer, among other wonderful cities and villages. Of course I adored every moment of every day: the bustling beauty of all the people, the food, the music, the natural wonders, and the history (which I had studied in college).

Over the last year, my focus with the organization has been finding a founding Managing Director who would partner with us to found a new Indian non-profit and build a “Code.org of India”: one that leverages the collected insights and expertise of our movement in other markets, but builds them in an entirely localized context, with an Indian team, for the Indian student, in an entirely native Indian way. Saleem joined us in October 2025, and working closely with him over the last 4 months, sharing everything I have learned and could offer as catalyst inputs, has been my #1 project.

With Saleem firmly in place, I’m now transitioning from a full-time position with Code.org into an ongoing volunteer advisory role as Secretary of a Global Advisory Council, which will support Saleem and his team in India with a collection of mentors from traditional business, enterprise software, education, and other sectors. This is new and exciting. Code.org is actively building a network of donors and advocates among the Indian diaspora tech community here who will directly support its work to reach tens of millions of students in India.

To be continued with details soon!